Along with all of the things others have said, you also need to make sure you have a proper cooling setup. Server class cards almost never have fans because they are expecting the server to pull or push air across the heatsinks. A typical PC case will not provide proper airflow to a card that’s expecting a cool room and server class fan CFMs flowing across it.

Edit: There are often workstation SKUs of GPUs that include a fan. I’m not sure about the P100, though.

Source: Server Admin

Gotcha! Thank you. I thought it was weird that there weren’t big blower fans and instead just a heatsink. I’ll make sure to set it up in the coldest part of the house and rig up a few more fans for much better airflow throughout the computer case.

Your case clearly supports full-size cards not half-height. You can usually buy whichever bracket you need if it’s a common card.

What video card are you getting? You are probably thinking single or double slot thickness cards. Your old card is double slot.

Also, is that just 3 DIMMs? Check the manual for specifics, but an unmatched pair could be tanking your memory speed worse than just having less memory.

I wanted to get an NVIDIA Tesla P100 16GB for cheap VRAM and compute on a headless server. Some of the cards on eBay have the “full length” big bracket plate and some have it ripped off, because some PCs have a shorter plate system. I just want to make sure I don’t have any problems. It looks like a full-size case, but I just wanted some confirmation. I don’t know what a DIMM is, but if you mean the RAM sticks, yes. I didn’t know much about PCs when I installed them and just kept putting them in. For some reason, installing a fourth made the system not run. I’ll have to figure that situation out sometime.

Ok, I wasn’t expecting a server-class card but it should be fine without its support bracket. Generic cases generally wouldn’t have a place to connect the support to. (Some might, but I can’t see the rest of the case. Your picture doesn’t actually show how much space you have to insert a longer card.)

The P100 is 10.5 inches long and 4.4 inches tall, so you should be able to measure for clearance. My 7900 XTX is 11.3 in and it fits in many towers, so I’m confident the P100 will fit in yours.
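If your tape measure is metric, here is a rough sketch of that clearance check. The card dimensions are the ones quoted above; the free-space numbers and the 10 mm safety margin are made-up placeholders, so swap in what you actually measure.

```python
# Card dimensions quoted in this thread.
P100_LENGTH_IN = 10.5
P100_HEIGHT_IN = 4.4
MM_PER_INCH = 25.4


def inches_to_mm(inches: float) -> float:
    """Convert inches to millimetres."""
    return inches * MM_PER_INCH


def fits(card_length_in: float, free_length_mm: float, margin_mm: float = 10.0) -> bool:
    """True if the card plus a safety margin fits in the measured free space.

    margin_mm is an assumed allowance for cables and fingers, not a spec value.
    """
    return inches_to_mm(card_length_in) + margin_mm <= free_length_mm


if __name__ == "__main__":
    measured_free_mm = 300.0  # hypothetical: slot bracket to nearest drive cage
    print(f"P100 length: {inches_to_mm(P100_LENGTH_IN):.1f} mm")
    print(f"Fits in {measured_free_mm:.0f} mm: {fits(P100_LENGTH_IN, measured_free_mm)}")
```

Measure from the inside of the rear slot bracket to whatever the card would hit first (drive cage, front fans, cables).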

Still, it’s a long card, so you might want to look around for a generic GPU support bracket to keep it from sagging over the long term.

DIMMs (dual inline memory modules) are your RAM sticks, yes. Typically, they run in pairs for best performance. So, generally, you would populate slot B and slot D for the first pair and slot A and C for the second pair. Regardless, the PC will generally still run with mismatched pairs, but it will clock itself down to the speed of the slowest module installed and may run in single-channel mode. This, in turn, slows the entire PC down by creating a bottleneck on the memory bus.
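To put rough numbers on that, here is a back-of-the-envelope sketch. It assumes the simple model described above: every stick runs at the slowest stick’s speed, and theoretical peak bandwidth scales with the number of active channels at 8 bytes per transfer per channel. The stick speeds are hypothetical, and real boards can behave differently with three DIMMs, so treat it as an illustration only.

```python
BYTES_PER_TRANSFER = 8  # one DDR channel is 64 bits wide


def effective_speed_mts(module_speeds_mts: list[int]) -> int:
    """All modules run at the speed of the slowest one installed."""
    return min(module_speeds_mts)


def peak_bandwidth_gbs(speed_mts: int, channels: int) -> float:
    """Theoretical peak bandwidth in GB/s for a given speed and channel count."""
    return speed_mts * 1_000_000 * BYTES_PER_TRANSFER * channels / 1e9


if __name__ == "__main__":
    sticks = [2400, 2400, 2133]  # hypothetical mix of DIMMs, in MT/s
    speed = effective_speed_mts(sticks)
    print(f"Effective speed: {speed} MT/s")
    print(f"Single channel: {peak_bandwidth_gbs(speed, 1):.1f} GB/s")
    print(f"Dual channel:   {peak_bandwidth_gbs(speed, 2):.1f} GB/s")
```

In this model, one slow stick drags the others down, and dropping to single-channel halves the theoretical peak on top of that.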

Edit: I haven’t thought about slot height in a while, but standard cases take full-height cards. Half-height cards are more niche.

Thank you for all your help, remotelove, you are awesome!! Here’s a better shot of the space I’m working with. (Please don’t yell at me about the dust. It’s why I was hesitant to show it. I know how it looks lol)

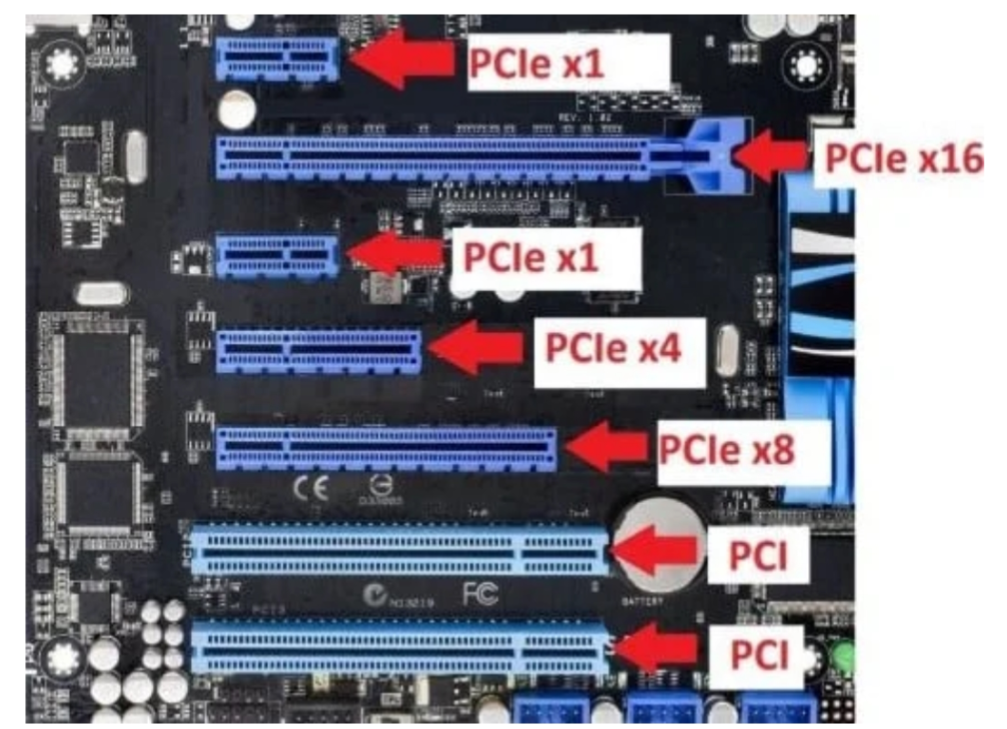

I don’t think space/length of the card will be an issue. While I still have your attention, I wanted to ask: in as simple an explanation as you can muster, what do I need to connect multiple GPUs to one motherboard? I don’t quite understand how PCIe lanes and bridges and all that work, but from what I read it’s possible, and you can use GPUs from different manufacturers together for what I’m doing. But how?? There’s only one slot. I know some boards have 2 or even 4, but there are homelabs with like 10 P100s chained together.

Please tell me you’re going to clean the case

I’m going to clean the case. A few fingerprint smudges and a “smokeydope wuz here” drawn in should be enough dust removed.

In all seriousness yep I plan to give it a real cleaning.

I figured as much lol I saw a few pictures and I kept telling myself “don’t talk about the dust don’t be that annoying person who talks about the dust” but then when I saw this last picture I went “goddamnit I can’t help it.”

“I don’t need it. I definitely do not need it. I… don’t need it…”

This is bugging me too

I agree, it seems that you have enough room for the length of the card.

For compute-only GPUs, you just plug them in any way you can, as long as your power supply has enough wattage to support them. You can even get 16x to 1x PCIe slot adapters for just that purpose. 16x vs 1x is the number of PCIe lanes, which in our context basically shows up as slot length. (It’s commonly done for Bitcoin mining.) Compute is different, as it generally doesn’t have the low latency requirements that a game would. PCIe is very robust when it comes to backward compatibility.
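A quick sketch of what the lane count and the power budget mean in numbers. The PCIe 3.0 figures (8 GT/s per lane, 128b/130b encoding) are the published ones; the 250 W per card is the commonly listed board power for the PCIe P100, so check your exact listing. The 150 W for the rest of the system and the 80% PSU headroom are my own placeholder assumptions.

```python
PCIE3_TRANSFERS_PER_S = 8e9   # PCIe 3.0 raw rate per lane (8 GT/s)
PCIE3_ENCODING = 128 / 130    # 128b/130b line encoding overhead


def link_bandwidth_gbs(lanes: int) -> float:
    """Theoretical one-direction PCIe 3.0 bandwidth in GB/s for a lane count."""
    return PCIE3_TRANSFERS_PER_S * PCIE3_ENCODING / 8 * lanes / 1e9


def seconds_to_copy(size_gb: float, lanes: int) -> float:
    """Best-case time to push size_gb of data to the card over the link."""
    return size_gb / link_bandwidth_gbs(lanes)


def psu_has_headroom(psu_watts: float, gpu_count: int, gpu_watts: float = 250.0,
                     rest_of_system_watts: float = 150.0, max_load: float = 0.8) -> bool:
    """True if the estimated draw stays under max_load of the PSU rating.

    rest_of_system_watts and max_load are assumptions, not measured values.
    """
    return gpu_count * gpu_watts + rest_of_system_watts <= psu_watts * max_load


if __name__ == "__main__":
    for lanes in (1, 16):
        print(f"x{lanes}: {link_bandwidth_gbs(lanes):.2f} GB/s, "
              f"16 GB copy in about {seconds_to_copy(16, lanes):.1f} s")
    print("750 W PSU, 2 cards OK:", psu_has_headroom(750, 2))
    print("1000 W PSU, 2 cards OK:", psu_has_headroom(1000, 2))
```

The takeaway is that a 1x link mostly costs you load time when filling VRAM. Once the data is on the card, compute that stays on the card is much less sensitive to the link width.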

When it comes to running an AMD card and an NVIDIA card together, I know Windows handles it OK. I am not sure about Linux compatibility or what issues you may see.